A Revelation

My personal experience with Neural Networks is that everything became much clearer when I started ignoring full-page, dense derivations of backpropagation equations and just started writing code…(I don’t believe [a lot of math] is necessary and it can sometimes even obfuscate simple concepts).

That was Andrej Karpathy, back in 2016 (I think) in his Hacker’s Guide to Neural Nets. To be honest, I haven’t even read that particular piece. It’s very long and is meant to be a primer, which I don’t need at this point. I came across it because I had just finished reading Mr. Karpathy’s post Deep Reinforcement Learning: Pong from Pixels. That piece was a revelation and exemplifies that quote. In it, he explains how he trained a fully connected NN to play pong using reinforcement learning. Just as I’m planning to do with my checkers game, he trains a fully connected NN via reinforcement learning to play pong reasonably well with no prior knowledge other than game rules. He does this with, as he says, a mere 130 lines of Python code.

Mr. Karpathy is a great writer, and he explains most of what he’s built using straightforward concepts rather than abstruse mathematical formulas. Among those concepts is how he’s doing the reinforcement. It was a search for how to do reinforcement in sparse reward scenarios that led me to the article in the first place, and the solution he presents is jarring in its simplicity.

Until I read that article, I had been thinking over how to reward (or penalize) my AI. Obviously winning a game is a reward and losing is a penalty, but I had also thought that it would make sense to incentivize things like jumping an opponent and gaining a king and to penalize getting jumped and having the opponent get a king. I had also been considering what Mr. Karpathy refers to as the credit assignment problem. After all, how much sense would it make to reward the first move of a winning game when that move had next to nothing to do with actually winning the game?

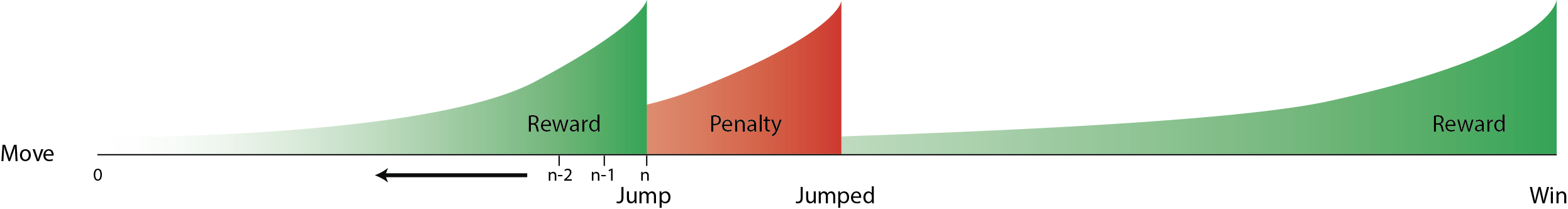

What I was considering was something like the diagram below:

I would set up a reward or a penalty for intermediate actions (jump or jumped) and a reward (or penalty) for the ultimate game outcome, win or loss. Furthermore, to accommodate credit attribution it seemed that it would make sense to give a more or less “full” reward at the state which immediately generated the reward-worthy move, but then to exponentially drop off the amount of the rewards back in time before the event in question, thus reducing how much an event that really had nothing to do with the action gets rewarded.

My solution seemed like a reasonable approach but there are a number of considerations that made me suspect it would be daunting to implement.

- How much to reward, say, a jump vs. a win?

- How to penalize opponent actions (such as gaining a king) that aren’t necessarily even attributable to any single event that the player initiates?

How to vectorize reinforcement with a cost function that will probabbly vary from state to state?This one isn’t really a factor since the process is going to be largely stochastic, not batch.- Does it really make sense to explicitly build this sort of “strategy” into the game? If one decides it’s ok, how far to take it? It feels out of the spirit of having the system learn itself.

These are all questions that ultimately have good answers, but in describing how he built his Pong AI, Mr. Karpathy renders all of them moot. His solution has the following two characteristics:

- The only event that generates a reward or penalty is winning a point or losing a point.

- Rewards and penalties are attributed at full force to every single state and decision leading up to the event that caused the reward.

Regarding point 1, it’s helpful to observe that pong is conceptually much simpler than checkers. In checkers, a player could choose to, for instance, king a piece an that would presumably generate a reward. But that move at that time could actually hurt the player’s overall position in the game, ultimately even leading to a loss. A single point in Atari Pong, however, does not share this quality. Winning a point unambiguously improves the player’s position in the game and ultimately brings the player numerically closer to an overall victory. So in that regard, it seems much more clear cut to use only the outcome of individual points as reward/penalty generators. But he could very well have tried to find intermediate rewards, for instance rewarding moves that position the paddle closer in 2D space to the ball. Or contacting the ball at a particular place on the paddle. He didn’t, and instead awards rewards and penalties to the one most fundamental and clear-cut event in the game, a won or a lost point.

He goes further by also fully attributing the reward/penalty equally to every state in that point, regardless of the degree to which it “deserves” credit for the outcome. So while some inconsequential move at the very beginning of a point may get undue credit for the subsequent win or loss, if it was truly inconsequential, the thousands and thousands of subsequent points will pile win and loss reinforcement in equal measure over the many times the system finds itself in a similar situation. This should add up to a probability output that nets out to just about 0.5. Whereas decisions that are truly advantageous to a given state will begin to accrue more wins than losses, and gradually, that state/decision will accumulate more positive reinforcement than negative, and push the probability away from 0.5 in one direction or the other.

Update 6/11: I was wrong about attributing the reward/penalty equally for each state leading up to the reward. I’ve been reviewing his code in detail, and in fact for each state further back in time, he discounts the reward or penalty by 0.99. I was planning a steeper curve, but the principle is there nonetheless.

His solution is elegant and beautiful, and as I saw when I ran his code, it works. But there are some major caveats. First, while he states up front that his system is both tiny and not significantly tuned, after about 30,000 games played over the course of 2 days against a rule-based AI, it was still only winning 40% of the points it played.

Also, his output is comically simple: one output node corresponding to the policy for whether to move the paddle up or down. There’s not even any way to just leave the paddle where it is (and thus, his AI looks like it drank too much coffee). Checkers has much more complicated output. It has the possible (legal and illegal) moves of a varying number of player pieces. Coming up with an effective loss function and gradient will require a good deal more thought.

But he does one thing with his output (simple as it is) that I confess, I might not have considered had I not read the article. Having only done straight-up classifiers to date, what I’ve always done is take the the output which can range from 0 to 1 and if the value is >0.5, classify as 1 and if ≤0.5, classify as 0. However, even in a classifier, we understand that decimal value to represent a probability that the output is a given class. In his algorithm, he takes that to its logical conclusion. Rather than toggle to either a 1 or zero, he truly implements the probabilistic model. That is, if the output is, say, 0.75, he generates a random number between 0 and 1. If that number is ≤ 0.75, he returns a 1, and otherwise returns a 0. This has the effect of reproducing the the probability represented in the output. But more importantly, in a training situation it also allows the AI to explore the domain by making moves that are not always the highest probability for a positive outcome. I will definitely be employing some more-involved version of this in the model I build.