AI Test Drive

A couple of days ago, I got all the machinery of my network in place and did my first test. Since I was using a network I wasn’t even sure worked, on a kind of problem I’d never tackled before (reinforcement learning), I decided to start with something easy: learning legal vs. illegal moves. Unlike learning to win at checkers, it’s much more similar to a basic classification problem. For any given board state, there is a “ground truth” set of legal vs. illegal moves that can be used to train the network. The test framework keeps a running tally of proposed illegal moves, so it can report illegal moves as a percentage of all proposed moves (illegal plus legal). I would track that percentage as the model got trained. If it was learning correctly, I could watch the illegal percentage slowly tick down from the random average of about 94.5%.

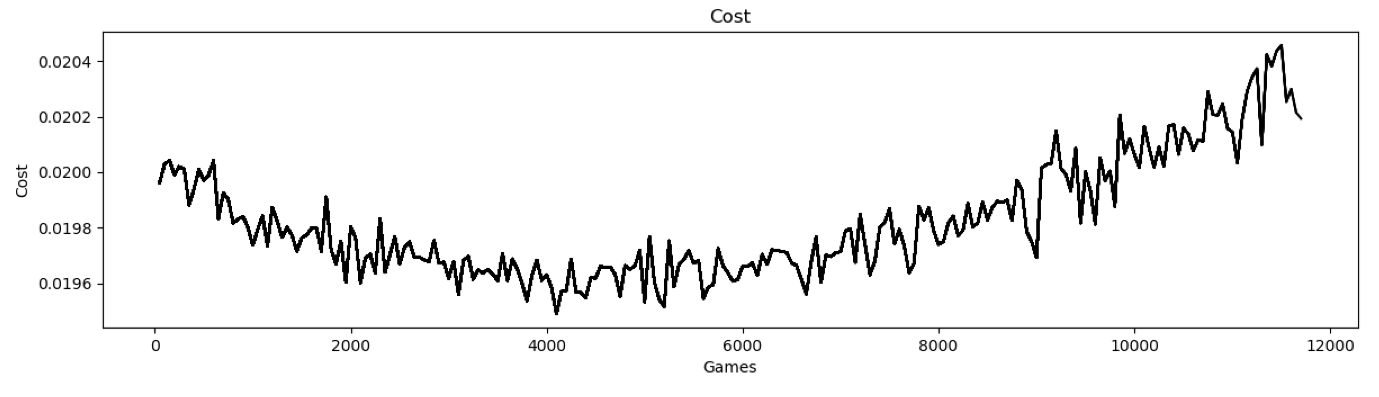
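The running tally described above can be sketched roughly like this. It is a minimal illustration, not the actual test framework: the `MoveTally` class, its method names, and the example moves are all hypothetical.

```python
class MoveTally:
    """Running count of proposed moves, split into legal and illegal."""

    def __init__(self):
        self.legal = 0
        self.illegal = 0

    def record(self, proposed_move, legal_moves):
        # legal_moves: set of output indices that are legal for this board
        if proposed_move in legal_moves:
            self.legal += 1
        else:
            self.illegal += 1

    def illegal_percentage(self):
        # Illegal proposals as a percentage of all proposals so far
        total = self.legal + self.illegal
        return 100.0 * self.illegal / total if total else 0.0


tally = MoveTally()
legal_moves = {3, 17, 40}        # hypothetical legal outputs for one board
for move in [3, 5, 17, 22, 9]:   # hypothetical proposed moves
    tally.record(move, legal_moves)

print(tally.illegal_percentage())  # 3 of the 5 proposals were illegal
```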

As mentioned here, I started off using softmax rather than sigmoid to account for having 48 outputs rather than the single output in Pong from Pixels. And as mentioned here, I had also decided to use mean squared error for cost. For my labels, I’d be labeling illegal moves with a zero to push them down, and labeling legal moves with the exact probability of the output to create a zero gradient (the cost derivative is \(2(a-y)\), where \(a\) is the output and \(y\) is the label, so setting \(y = a\) zeroes it out). At the start, things were a bit of a mess. I was getting a cost graph that was extremely noisy over time, not necessarily trending down, and that most of the time eventually started trending upwards, like so:

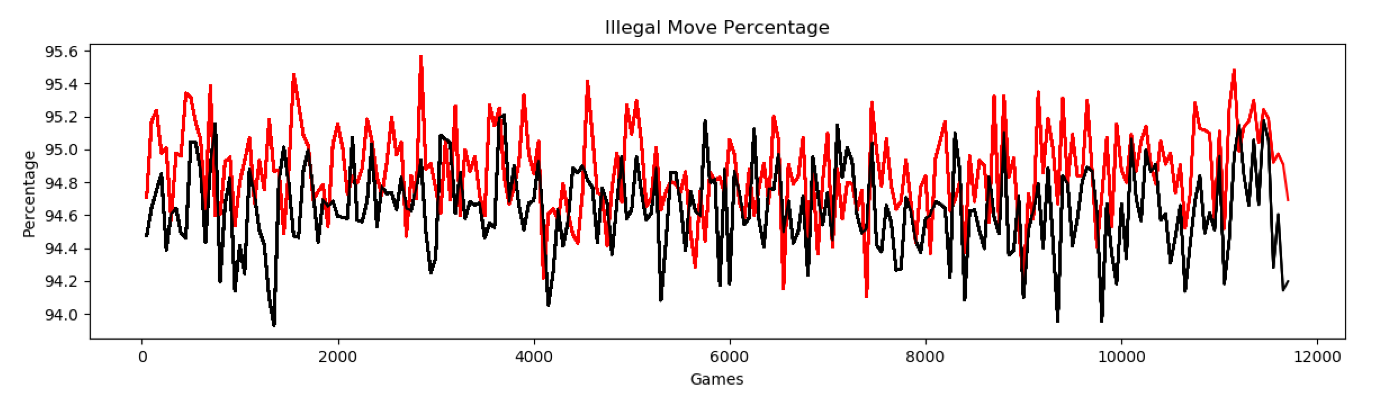

And the percentage of illegal moves (red was the model) was not budging:

I was flailing around a bit trying lots of things, and it is not lost on me now that I may have had a problem with my gradients, which I did not check (and still have not). One thing I even did was add a new set of features to the model: I decided to add the 48-element array of legal and illegal moves explicitly as inputs to the model. I figured the network might not have been sophisticated enough to derive those directly, so I’d help it along. It made no difference.

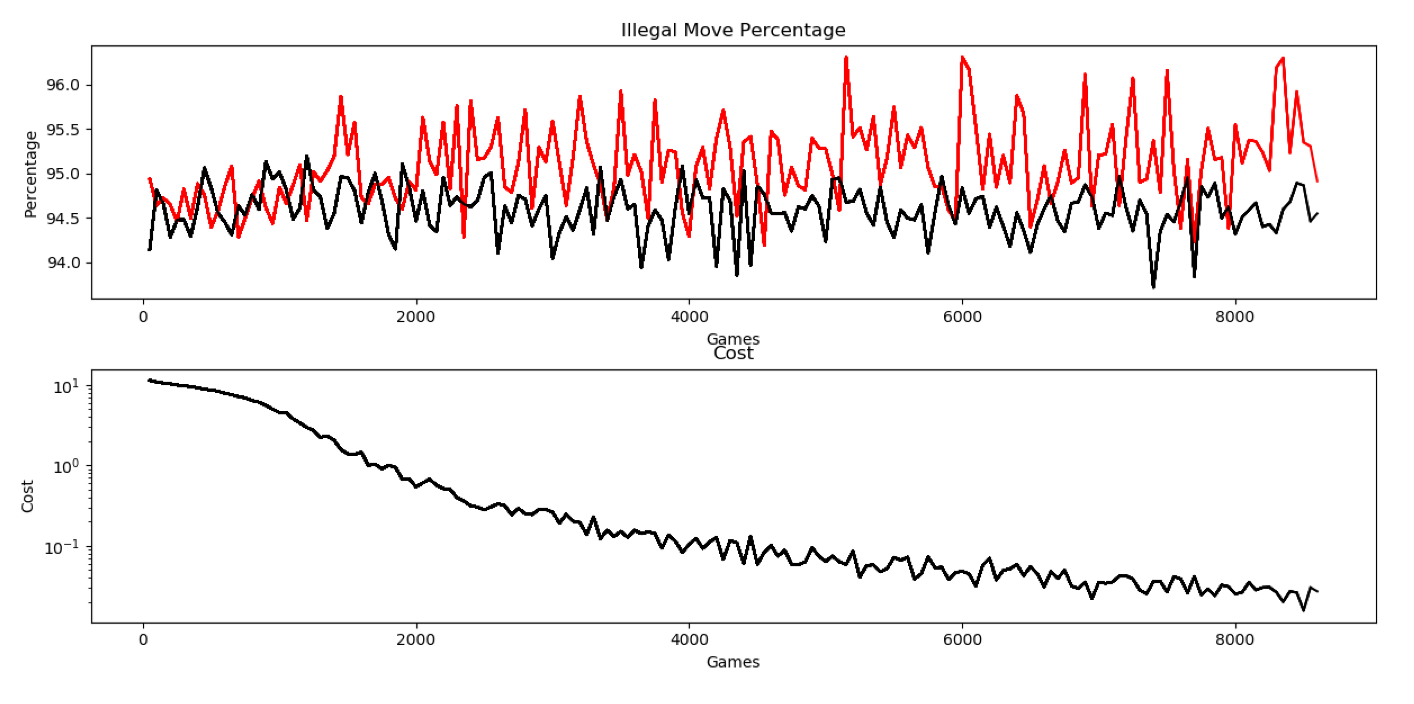
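For reference, the original labeling scheme (softmax outputs, mean squared error, a zero label for illegal moves and the output’s own value for legal moves) can be sketched as follows. This is an illustration under assumptions, not the actual network code: the function names are made up, it uses 4 outputs instead of 48, and it only shows the cost derivative with respect to the outputs, not the backward pass through softmax.

```python
import math


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def make_labels(outputs, legal_mask):
    # Illegal moves are labeled 0; legal moves are labeled with their own output
    return [a if legal else 0.0 for a, legal in zip(outputs, legal_mask)]


def mse_cost_derivative(outputs, labels):
    # The derivative of (a - y)^2 with respect to a is 2(a - y)
    return [2.0 * (a - y) for a, y in zip(outputs, labels)]


logits = [0.5, -1.0, 2.0, 0.0]            # hypothetical pre-softmax values
legal_mask = [True, False, False, True]   # hypothetical legal/illegal flags

outputs = softmax(logits)
labels = make_labels(outputs, legal_mask)
grads = mse_cost_derivative(outputs, labels)

for a, legal, g in zip(outputs, legal_mask, grads):
    print(f"output={a:.3f} legal={legal} dC/da={g:.3f}")
```

The legal outputs get a derivative of exactly zero, while each illegal output gets a positive derivative of twice its own value, so only the illegal moves are being pushed anywhere.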

What I eventually did was to see how things worked using sigmoid. Immediately, the cost function trended steadily and noiselessly downward. (I had to switch to a log y-axis because at the start, the cost seemed to flatline near zero.) The problem, though, was that the illegal move percentage didn’t decrease. And in fact, it ticked up a bit to worse than random chance:

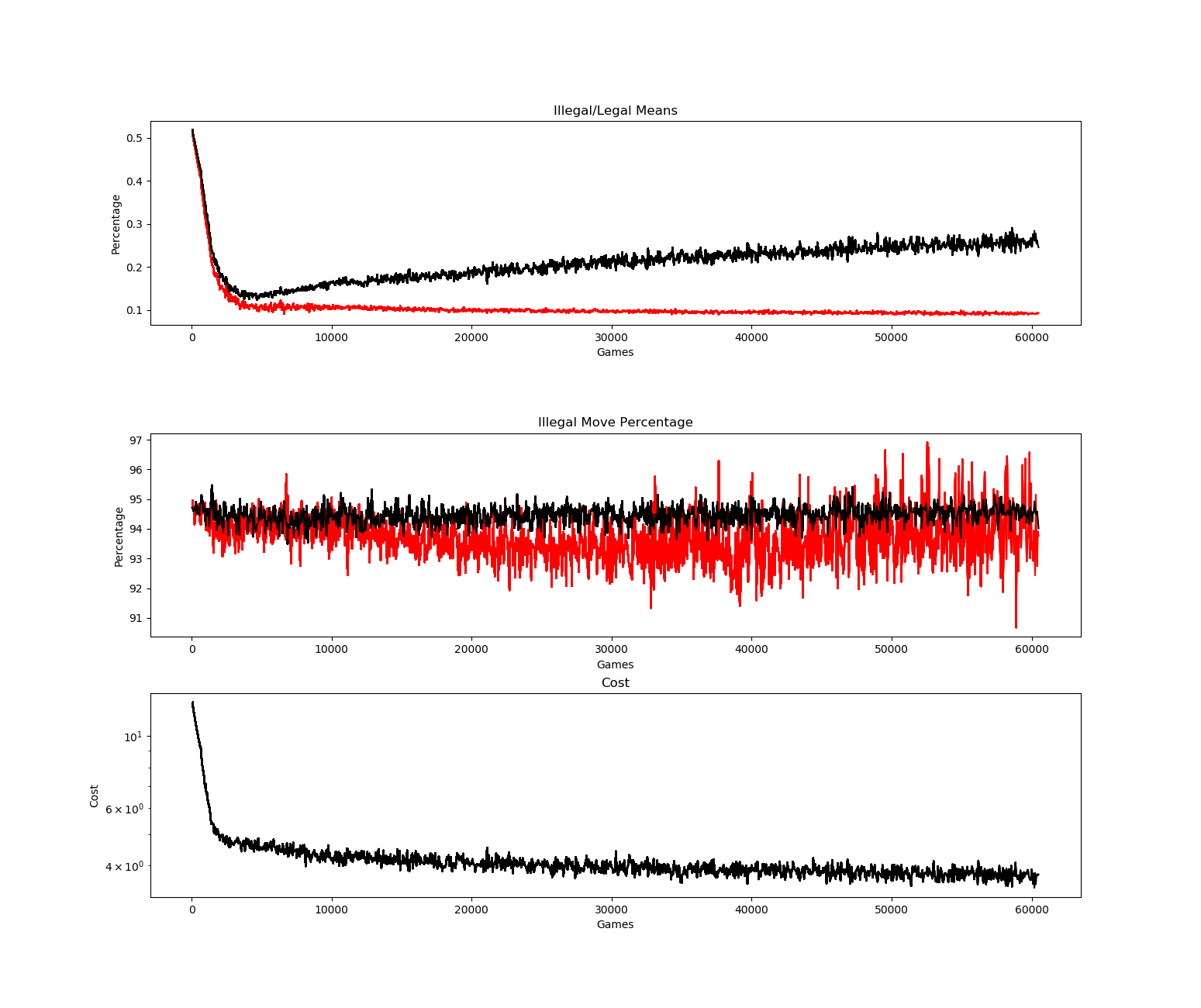

If the cost was unequivocally being optimized, then something was up. Specifically, a decreasing cost means that the value of any output that was labeled as illegal should be trending downward. If the illegal move percentage was not changing, and was in fact going up, then one possibility was that the outputs for legal moves were trending downward as well. After all, I was explicitly not applying any gradient to legal move outputs. So I decided to track the mean value of all legal outputs and all illegal outputs after each round of training. Sure enough, they were both trending downward at the same rate (I didn’t save the plots of that). What that would seem to require, then, is not only providing the negative (zero) label for the illegal moves, but also reinforcing the legal moves with a 1, which should push the legal move outputs up. When I did that, everything seemed to fall into place. Here are my graphs after 60,000 games:

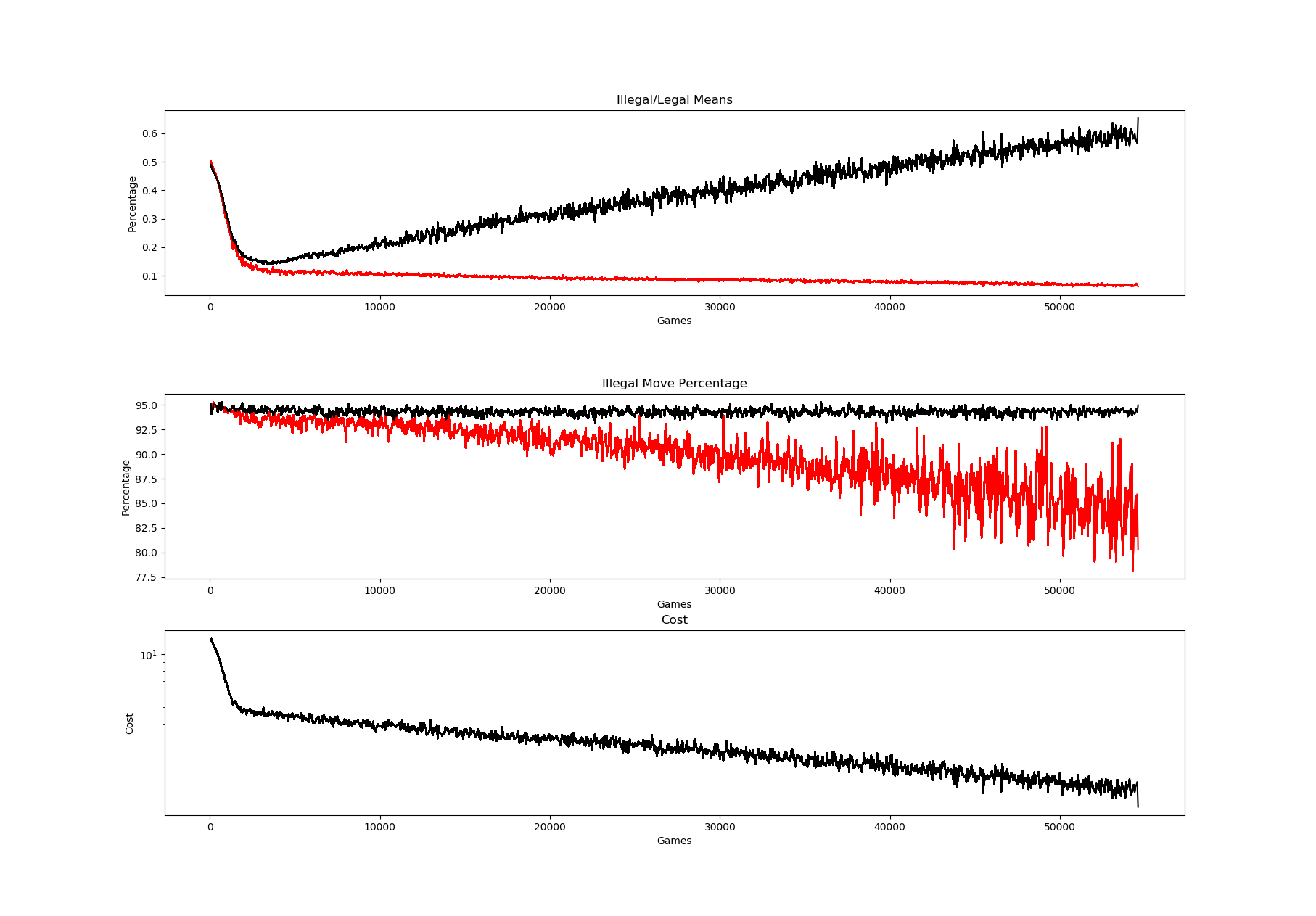

Approaching 60,000 games, the mean value (sigmoid) being output for legal moves (the graph says percentage, I know. It was very late when I got to this point) was up around .55, and the percentage of illegal moves was pushing steadily downward.
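One plausible way to see why the legal outputs could sink when they receive no gradient of their own is a toy model in which every output shares a parameter. The sketch below is not the checkers network: it assumes each output is `sigmoid(w[i] + b)` with a single shared bias `b` standing in for the shared hidden layers, and the mask, step count, and learning rate are arbitrary.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(legal_mask, reinforce_legal, steps=200, lr=0.5):
    """Toy model: output i = sigmoid(w[i] + b), with b shared by all outputs."""
    w = [0.0] * len(legal_mask)
    b = 0.0
    for _ in range(steps):
        a = [sigmoid(wi + b) for wi in w]
        # Labels: 0 for illegal; for legal, either 1 or the output itself
        y = [(1.0 if reinforce_legal else ai) if legal else 0.0
             for ai, legal in zip(a, legal_mask)]
        # Chain rule for MSE through a sigmoid: dC/dz = 2(a - y) * a(1 - a)
        dz = [2.0 * (ai - yi) * ai * (1.0 - ai) for ai, yi in zip(a, y)]
        w = [wi - lr * d for wi, d in zip(w, dz)]
        b -= lr * sum(dz)  # the shared parameter sees every output's gradient
    a = [sigmoid(wi + b) for wi in w]
    legal = [ai for ai, is_legal in zip(a, legal_mask) if is_legal]
    illegal = [ai for ai, is_legal in zip(a, legal_mask) if not is_legal]
    return sum(legal) / len(legal), sum(illegal) / len(illegal)


legal_mask = [True] + [False] * 7  # hypothetical: 1 legal move out of 8 outputs

# Scheme A: legal moves labeled with their own output (zero gradient)
mean_legal_a, mean_illegal_a = train(legal_mask, reinforce_legal=False)
# Scheme B: legal moves labeled with 1
mean_legal_b, mean_illegal_b = train(legal_mask, reinforce_legal=True)

print("own-output labels:", mean_legal_a, mean_illegal_a)
print("labels of 1:      ", mean_legal_b, mean_illegal_b)
```

In this toy, all outputs start at 0.5. Under scheme A the shared bias is only ever pushed down by the illegal outputs, so the legal mean falls along with the illegal mean. Under scheme B the legal output has its own upward push and ends above 0.5. Whether the same mechanism explains the real network’s behavior is a guess.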

I was very pleased with myself.

Then I remembered that I had explicitly added legal vs. illegal moves as a feature to the input. With them removed, there’s still learning and optimization going on, but the picture isn’t so rosy:

The optimization happens significantly slower and, as such, the mean value of the legal moves is not pushing up nearly as quickly as before. If that were all, I think it would be fine. The problem is in the percentage of illegal moves. First, after starting (amid all the noise) to drop below random chance, it eventually starts, apparently, to push back up again. More alarming, though, is that it begins with great regularity to spike way above random chance.

These are late results. The graph above is saved from the training that’s presently going on, and all this work is stuff I’ve been squeezing in after my “day job” and all the various family responsibilities. I suspect I’ll eventually figure out what’s going on, but in the short run, I want to do the gradient check that I didn’t do before. Maybe there’s something wrong with that…
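For what it’s worth, a gradient check usually means comparing the backprop gradient against a numerical estimate from centered finite differences. Here is a minimal sketch on a single sigmoid unit with squared-error cost. It is not the checkers network, and the function names, weights, inputs, and `eps` value are all illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cost(weights, inputs, label):
    # Single sigmoid unit with squared-error cost
    z = sum(w * x for w, x in zip(weights, inputs))
    return (sigmoid(z) - label) ** 2


def analytic_grad(weights, inputs, label):
    # Backprop by hand: dC/dw_i = 2(a - y) * a(1 - a) * x_i
    z = sum(w * x for w, x in zip(weights, inputs))
    a = sigmoid(z)
    dz = 2.0 * (a - label) * a * (1.0 - a)
    return [dz * x for x in inputs]


def numeric_grad(f, weights, eps=1e-5):
    # Centered difference: (f(w + eps) - f(w - eps)) / (2 * eps), per weight
    grads = []
    for i in range(len(weights)):
        plus = list(weights)
        minus = list(weights)
        plus[i] += eps
        minus[i] -= eps
        grads.append((f(plus) - f(minus)) / (2.0 * eps))
    return grads


def relative_error(g1, g2):
    # Norm of the difference, scaled by the sum of the two norms
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(g1, g2)))
    den = math.sqrt(sum(a * a for a in g1)) + math.sqrt(sum(b * b for b in g2))
    return num / den if den else 0.0


weights = [0.3, -0.2, 0.5]   # hypothetical values
inputs = [1.0, 2.0, -1.0]
label = 1.0

g_analytic = analytic_grad(weights, inputs, label)
g_numeric = numeric_grad(lambda w: cost(w, inputs, label), weights)
print(relative_error(g_analytic, g_numeric))
```

If the hand-derived gradient is right, the relative error should come out tiny. An error that is orders of magnitude larger usually points at a bug in the backward pass.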